A Comprehensive Guide to Inferential Statistics

This guide examines inferential statistics and its role in data-driven decision-making across many fields. Inferential statistics lets us make predictions and informed estimates about a population from sample data, enabling businesses, researchers, and other organizations to draw well-founded conclusions. Understanding its methods and applications is essential for effective data analysis.

Understanding Inferential Statistics

Inferential statistics is a branch of statistics focused on making inferences about a population based on a sample. It allows researchers to draw conclusions and make predictions, bridging the gap between sample data and general population insights. The importance of inferential statistics lies in its ability to provide a structured method for interpreting data, making it indispensable in fields like market research, healthcare, and social sciences where understanding broader trends is crucial.

The goal is to extract meaningful insights from limited data so that researchers and analysts can generalize their findings to the larger population from which the sample was drawn. This makes inferential statistics a critical tool for making data-driven decisions without studying every individual in a population.

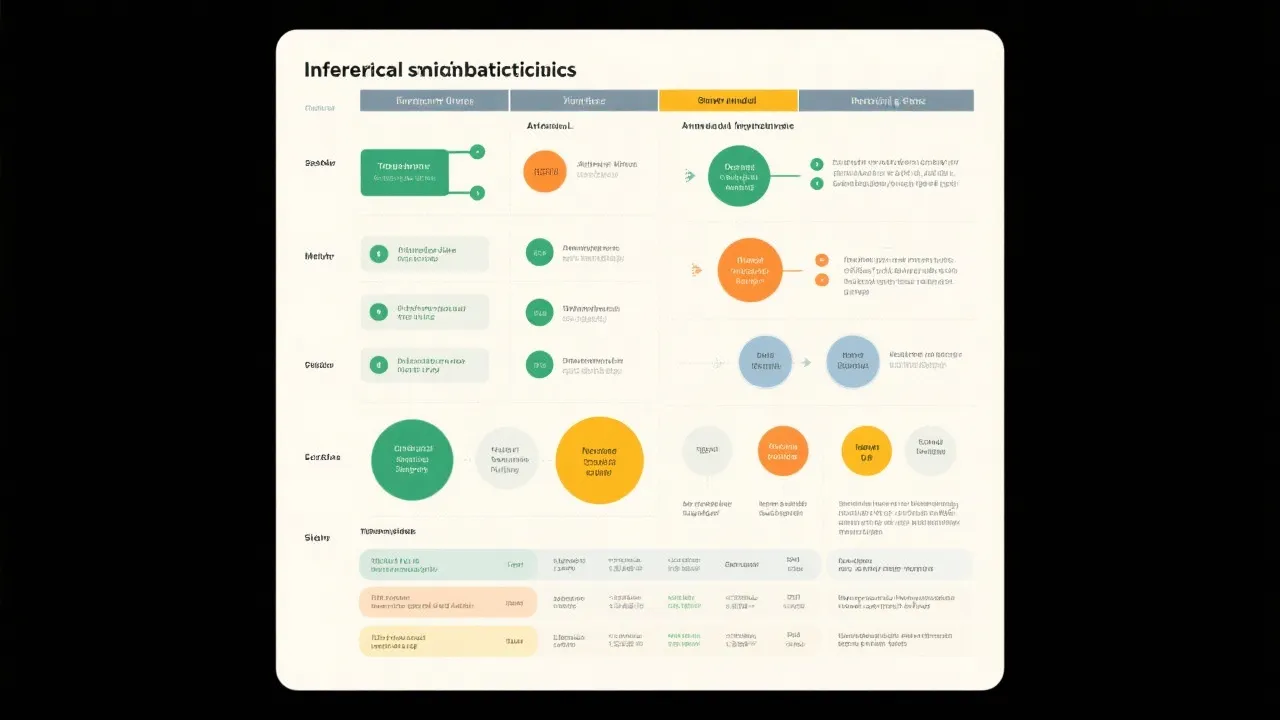

Core Concepts of Inferential Statistics

At the heart of inferential statistics are several key concepts: estimation, hypothesis testing, significance levels, and confidence intervals. These tools help analysts make predictions and validate findings. For example, hypothesis testing is a systematic procedure used to determine whether there is enough evidence in a sample to infer that a certain condition is true for the entire population.

Each of these concepts plays a distinct role in how data is interpreted and used. Let's explore them in more detail:

Estimation Methods

Estimation involves inferring the value of a population parameter based on a sample statistic. The two main types of estimates are point estimates and interval estimates. Point estimation provides a single value as an estimate of the population parameter, whereas interval estimation includes a range of values, establishing a margin of error around the estimate.

Point estimates give a quick read on the population parameter, but on their own they say nothing about how precise the estimate is, because they do not convey the sampling variability behind it. For example, if the average height in a sample of individuals is 170 cm, that figure alone cannot tell us how close it is likely to be to the true average height of the population. Interval estimates, such as confidence intervals, are more informative: they give a range of plausible values for the population parameter and so capture the uncertainty inherent in sampling.
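As a minimal sketch of the difference between a point estimate and an interval estimate, the Python function below returns the sample mean together with an approximate confidence interval. The function name `mean_confidence_interval` and the height values are hypothetical, and the sketch assumes a large-sample normal approximation (a z critical value); for small samples like this one, a t critical value would be more appropriate.

```python
from statistics import NormalDist, mean, stdev

def mean_confidence_interval(sample, confidence=0.95):
    """Return the sample mean (point estimate) and an approximate CI (interval estimate).

    Uses a normal (z) critical value, which assumes a reasonably large sample.
    """
    n = len(sample)
    point = mean(sample)
    standard_error = stdev(sample) / n ** 0.5  # estimated SD of the sample mean
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return point, (point - z * standard_error, point + z * standard_error)

# Hypothetical heights (cm) from a sample of ten individuals
heights = [168, 172, 165, 174, 170, 169, 171, 175, 166, 170]
point, (low, high) = mean_confidence_interval(heights)
print(f"point estimate: {point:.1f} cm, 95% CI: ({low:.1f}, {high:.1f}) cm")
```

The point estimate is the same single number either way; the interval adds the information about how much that number could plausibly move from sample to sample.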

Hypothesis Testing and Significance

Hypothesis testing forms the backbone of inferential statistics, offering a structured way to evaluate assumptions and theories. Researchers state a null hypothesis (H0), typically of no effect or no difference, and an alternative hypothesis (Ha), then assess whether the sample data provide enough evidence to reject H0 in favor of Ha. The significance level, denoted alpha (α) and commonly set to 0.05 or 0.01, is the threshold for rejecting the null hypothesis; it is also the probability of rejecting H0 when H0 is actually true.

In practice, the test produces a p-value: the probability, assuming H0 is true, of obtaining a result at least as extreme as the one observed. A p-value below the significance level means the observed data would be unlikely if the null hypothesis were true, so researchers reject H0 in favor of Ha. A p-value above alpha means the data do not provide sufficient evidence against H0; it does not prove that H0 is true.
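The decision rule can be sketched with a one-sample z-test. This sketch assumes the population standard deviation is known, a simplification made here for illustration; when it must be estimated from the data, a t-test is the more common choice. The function name and the example numbers are hypothetical.

```python
from statistics import NormalDist

def one_sample_z_test(sample_mean, mu0, sigma, n, alpha=0.05):
    """Two-sided one-sample z-test of H0: mu == mu0, assuming a known sigma."""
    standard_error = sigma / n ** 0.5
    z = (sample_mean - mu0) / standard_error
    # p-value: probability under H0 of a statistic at least as extreme as z
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    reject_h0 = p_value < alpha
    return z, p_value, reject_h0

# Hypothetical: H0 says the mean height is 170 cm; a sample of 64 has mean 172 cm
z, p, reject = one_sample_z_test(sample_mean=172, mu0=170, sigma=8, n=64)
print(f"z = {z:.2f}, p = {p:.4f}, reject H0 at alpha=0.05: {reject}")
```

With these numbers z = 2 and p is about 0.046, so H0 is rejected at α = 0.05 but not at α = 0.01. The same data lead to different decisions depending on the threshold, which is why alpha should be fixed before the test is run.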

The implications of hypothesis testing extend beyond the computations themselves, shaping decisions in many domains. For example, in a clinical trial for a new medication, researchers might set H0 as "the drug has no effect." If the trial yields a p-value below 0.05, they would reject H0 and conclude that the data provide evidence of an effect, a finding that can influence subsequent medical practice and patient care.
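One way to obtain a p-value for a two-group trial without assuming a particular distribution is a permutation test. It is a swapped-in technique chosen for illustration, not a method the guide prescribes, and the outcome values and the function name `permutation_test` are hypothetical. The idea: if H0 (no drug effect) is true, the treatment and placebo labels are interchangeable, so the p-value is estimated as the share of random relabelings whose difference in means is at least as large as the observed one.

```python
import random

def permutation_test(treatment, placebo, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    observed = sum(treatment) / len(treatment) - sum(placebo) / len(placebo)
    pooled = list(treatment) + list(placebo)
    n_t = len(treatment)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign the group labels
        diff = sum(pooled[:n_t]) / n_t - sum(pooled[n_t:]) / (len(pooled) - n_t)
        if abs(diff) >= abs(observed) - 1e-12:  # small tolerance for float rounding
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly positive
    return observed, (extreme + 1) / (n_permutations + 1)

# Hypothetical symptom-improvement scores for each trial arm
treatment = [5.1, 6.3, 5.8, 7.0, 6.5, 5.9, 6.8, 6.1]
placebo = [4.2, 4.8, 5.0, 4.5, 5.3, 4.1, 4.9, 4.6]
diff, p = permutation_test(treatment, placebo)
print(f"observed difference = {diff:.3f}, p = {p:.4f}")
```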

The Role of Confidence Intervals

A confidence interval gives a range of plausible values for a parameter and conveys the precision of a sample estimate. The confidence level describes the procedure rather than any single interval: for a 95% confidence interval, if the population were sampled many times and an interval computed from each sample in the same way, approximately 95% of those intervals would contain the true population parameter.
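The repeated-sampling interpretation can be checked by simulation. The sketch below assumes normally distributed data with a known standard deviation, so the z-based interval has exactly the stated coverage in theory; the parameter values and the function name are hypothetical. It draws many samples, builds an interval from each, and reports the fraction of intervals that contain the true mean.

```python
import random
from statistics import NormalDist

def simulate_coverage(true_mean=170.0, sigma=8.0, n=30, confidence=0.95,
                      reps=2000, seed=1):
    """Fraction of simulated z-intervals (known sigma) that contain true_mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sigma / n ** 0.5  # same width every time because sigma is known
    hits = 0
    for _ in range(reps):
        sample_mean = sum(rng.gauss(true_mean, sigma) for _ in range(n)) / n
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / reps

print(f"coverage of 95% intervals: {simulate_coverage():.3f}")
```

The reported fraction should land close to 0.95 but not exactly on it, because only a finite number of samples is simulated.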

Confidence intervals can play a crucial role in decision-making. For instance, if a survey indicates an average customer satisfaction rating of 4.2 with a 95% confidence interval of [4.0, 4.4], a business can treat 4.0 to 4.4 as the range of plausible values for the true average rating across all its customers. This conveys not only the estimated average satisfaction but also how precisely it was measured, which matters when the figure feeds into business strategy and customer-relations decisions.

Applications of Inferential Statistics

Applications of inferential statistics span a wide range of industries and sectors. In healthcare, it can be used to determine the effectiveness of a new drug based on clinical trials. Researchers analyze sample data from trial participants to make informed conclusions about how the drug may perform across the broader patient population.

In business analytics, inferential statistics guides market predictions and consumer behavior analysis. For instance, companies survey samples of customers about their preferences and opinions and use the results to infer broader market trends and behaviors. These inferences inform product development, marketing strategies, and customer engagement.
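As an illustration of survey-based inference, the sketch below estimates the share of all customers who prefer a product, with an approximate 95% confidence interval from the simple normal-approximation (Wald) interval. The survey counts and the function name are hypothetical. For small samples or proportions near 0 or 1, an alternative such as the Wilson score interval is generally preferred.

```python
from statistics import NormalDist

def proportion_confidence_interval(successes, n, confidence=0.95):
    """Wald (normal-approximation) confidence interval for a population proportion."""
    p_hat = successes / n  # sample proportion, the point estimate
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * (p_hat * (1 - p_hat) / n) ** 0.5
    return p_hat, margin, (p_hat - margin, p_hat + margin)

# Hypothetical survey: 540 of 1,200 respondents prefer the new product
p_hat, margin, (low, high) = proportion_confidence_interval(540, 1200)
print(f"estimated share: {p_hat:.1%} ± {margin:.1%} -> ({low:.1%}, {high:.1%})")
```

With these counts the estimated share is 45.0% with a margin of error of about 2.8 percentage points.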

Government agencies utilize inferential statistics for policy-making. By analyzing survey data, they can identify areas needing attention or development, evaluate the potential impact of proposed legislation, and report trends in public opinion. This has far-reaching implications, affecting funding allocations, social programs, and community services.

Moreover, inferential statistics is relevant in social sciences research, where understanding human behavior and societal trends is vital. Social researchers collect data on public attitudes toward various issues, like education or employment, to formulate theories and contribute to academic discourse and public awareness campaigns.

Challenges and Considerations

While inferential statistics offers powerful tools, it is not without challenges. Sampling error, biased samples, and incorrect model assumptions can all lead to flawed inferences. It's essential to ensure that the sample is representative and that data collection methods are sound and unbiased.

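Sampling error is the natural discrepancy between a sample statistic and the corresponding population parameter that arises by chance, because only part of the population is observed. Sampling bias is a more serious problem: it occurs when the sample systematically fails to represent the population, and it leads to incorrect conclusions that a larger sample will not fix. Bias can stem from many factors, including a poor sampling method or the unintentional exclusion of particular groups. Researchers should therefore use random sampling techniques wherever possible; in simple random sampling, every member of the population has an equal chance of being selected.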
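The sketch below contrasts a simple random sample with a convenience sample drawn from a hypothetical, artificially constructed population of incomes. `random.sample` draws without replacement, giving every member the same chance of selection. The convenience sample is assumed, purely for illustration, to reach only the lowest earners, which shows how a biased selection method distorts the estimate however carefully it is computed.

```python
import random
from statistics import mean

# Hypothetical population of 1,000 incomes, evenly spaced for simplicity
population = [20_000 + 100 * i for i in range(1000)]
population_mean = mean(population)

# Simple random sample: every member has an equal chance of selection
rng = random.Random(42)
random_sample = rng.sample(population, 100)

# Biased "convenience" sample: only the 100 easiest-to-reach members,
# assumed here (hypothetically) to be the lowest earners
convenience_sample = sorted(population)[:100]

print(f"population mean:  {population_mean:,.0f}")
print(f"random sample:    {mean(random_sample):,.0f}")
print(f"convenience mean: {mean(convenience_sample):,.0f}")
```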

Analysts should also be well-versed in the statistical assumptions underlying the methods they use. For example, many inferential techniques, such as t-tests and ANOVA, assume that the data (or the model's errors) are approximately normally distributed. Failing to verify these assumptions can lead to misleading interpretations and conclusions.

Another challenge lies in misinterpreting results, which can lead to overstated or misleading conclusions. Statistical significance does not always translate to practical significance: with a large enough sample, even a trivially small effect can produce a very small p-value. Researchers must distinguish between statistical results and their real-world implications, for example by reporting effect sizes alongside p-values, to avoid overstating findings.
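The sketch below makes the distinction between statistical and practical significance concrete. It uses summary statistics for two hypothetical groups and a two-sample z-test that assumes a known, common standard deviation. Alongside the p-value it reports Cohen's d, a standard effect-size measure. A 0.3-point difference on a scale with a standard deviation of 15 is far from significant with 50 observations per group but highly significant with 200,000, while its effect size stays tiny in both cases. The function name is hypothetical.

```python
from statistics import NormalDist

def z_test_with_effect_size(mean_a, mean_b, sd, n_per_group):
    """Two-sided two-sample z-test from summary statistics, plus Cohen's d.

    Assumes both groups share a known standard deviation `sd`.
    """
    standard_error = sd * (2 / n_per_group) ** 0.5
    z = (mean_a - mean_b) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    cohens_d = (mean_a - mean_b) / sd  # difference expressed in SD units
    return p_value, cohens_d

# Same small difference (0.3 points, SD 15) at two hypothetical sample sizes
for n in (50, 200_000):
    p, d = z_test_with_effect_size(100.3, 100.0, sd=15, n_per_group=n)
    print(f"n = {n:>7,}: p = {p:.2g}, Cohen's d = {d:.2f}")
```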

FAQs

- What distinguishes inferential statistics from descriptive statistics?

Descriptive statistics summarize the data in a sample, whereas inferential statistics use sample data to draw conclusions about a population.

- How important is the sample size in inferential statistics?

Very important. Larger samples reduce sampling variability and therefore the margin of error, which shrinks roughly in proportion to 1/√n. A larger sample does not, however, correct bias from a non-representative sample.

- Can inferential statistics be used for all types of data?

Not every method suits every dataset. Parametric methods typically assume conditions such as normality, independence, and homogeneity of variance; when these assumptions do not hold, nonparametric alternatives may be more appropriate.
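A quick way to see how sample size affects precision is to compute the margin of error of a mean for several sample sizes. The sketch below assumes a known population standard deviation (a hypothetical 10 units) and a normal critical value. Because the margin scales with 1/√n, quadrupling the sample size halves it.

```python
from statistics import NormalDist

def margin_of_error(sigma, n, confidence=0.95):
    """Half-width of a z-based confidence interval for a mean (known sigma)."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return z * sigma / n ** 0.5

for n in (100, 400, 1600):
    print(f"n = {n:>4}: margin of error = ±{margin_of_error(10, n):.2f}")
```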

The Importance of Statistical Power

Statistical power is another crucial component of hypothesis testing in inferential statistics. It refers to the probability that a statistical test will correctly reject a false null hypothesis (H0). In other words, a test with high statistical power is more likely to detect an effect or difference when one truly exists.

Several factors affect the power of a statistical test, including sample size, effect size, significance level, and variability within the data. Generally, larger sample sizes increase power, thus enhancing the likelihood of detecting significant differences. Additionally, larger effect sizes (the magnitude of the difference or relationship being studied) also contribute to increased power.

Setting an appropriate significance level (alpha) is essential as well. A higher alpha level (e.g., 0.10 instead of 0.05) increases power, but it also raises the risk of Type I errors (incorrectly rejecting a true null hypothesis). Thus, researchers need to balance the need for power with their tolerance for risk when determining their alpha level.
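The factors above can be explored with a normal-approximation power formula for a two-sided, two-sample comparison of means. In this sketch, `d` is the standardized effect size (difference in means divided by a common standard deviation, assumed known), and the function name is hypothetical. Exact power for a t-test differs slightly, especially for small samples.

```python
from statistics import NormalDist

def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test for effect size d."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)  # rejection threshold for |Z|
    shift = d * (n_per_group / 2) ** 0.5  # mean of the test statistic when the effect is d
    # Probability the statistic falls in either rejection region
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)

print(f"{approx_power(0.5, 20):.2f}")              # baseline
print(f"{approx_power(0.5, 64):.2f}")              # larger sample: more power
print(f"{approx_power(0.8, 20):.2f}")              # larger effect: more power
print(f"{approx_power(0.5, 20, alpha=0.10):.2f}")  # higher alpha: more power
```

When the true effect is zero the function returns alpha itself, which is the Type I error rate: raising alpha buys power precisely by accepting more false rejections.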

Ensuring that a study has sufficient power is a fundamental step in research design, often conducted through power analysis before the collection of data. A power analysis allows researchers to estimate the minimum sample size needed to detect an effect, aiding in the proper planning of studies to avoid underpowered situations, which can lead to inconclusive or misleading results.
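A minimal power-analysis sketch, using the same kind of normal approximation for a two-sided, two-sample comparison of means, solves for the smallest number of participants per group that reaches a target power. The effect sizes, alpha, and power values below are illustrative assumptions and the function name is hypothetical; calculations based on the t distribution typically give a slightly larger n.

```python
import math
from statistics import NormalDist

def sample_size_per_group(d, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample z-test.

    Uses n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2, rounded up.
    """
    z = NormalDist().inv_cdf
    n = 2 * ((z(1 - alpha / 2) + z(power)) / d) ** 2
    return math.ceil(n)

# Smaller effects require much larger samples to detect reliably
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {sample_size_per_group(d)} per group")
```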

Advanced Topics in Inferential Statistics

Beyond the foundational concepts and applications, inferential statistics encompasses a range of advanced topics that further enhance our ability to make accurate inferences.

Regression Analysis

Regression analysis is a statistical method for modeling the relationship between a dependent variable and one or more independent variables, making it a powerful tool for prediction and analysis.

In simple linear regression, one independent variable is used to predict the value of a dependent variable. For instance, researchers might use regression analysis to predict future sales from past sales data. Multiple regression extends this by using several independent variables simultaneously, creating a more comprehensive model of the data. This can account for various factors that influence the dependent variable, which can lead to more precise predictions and insights.
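For simple linear regression, the least-squares slope and intercept have closed forms. The sketch below computes them in plain Python and uses hypothetical monthly sales figures, treating the month number as the independent variable, to project the next month. It estimates coefficients only; standard errors, confidence intervals, and tests for the coefficients would require further calculation. Python 3.10+ also offers `statistics.linear_regression` for the same fit.

```python
def simple_linear_regression(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Slope = covariance(x, y) / variance(x); the 1/(n-1) factors cancel
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar  # the fitted line passes through the means
    return slope, intercept

# Hypothetical monthly sales (thousands of units) for months 1 to 6
months = [1, 2, 3, 4, 5, 6]
sales = [12.0, 13.5, 13.9, 15.2, 16.1, 17.0]
slope, intercept = simple_linear_regression(months, sales)
print(f"sales ≈ {intercept:.2f} + {slope:.2f} * month")
print(f"projected month 7: {intercept + slope * 7:.1f}")
```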

ANOVA (Analysis of Variance)

ANOVA is a statistical technique used to compare means among three or more groups. This is particularly useful when researchers need to determine whether different treatments produce varying effects and assess the influence of categorical independent variables on a continuous dependent variable.

For instance, a researcher could use ANOVA on data from different educational programs to determine whether mean student test scores differ significantly among the programs. A significant result indicates only that at least one program's mean differs from the others; post-hoc comparison tests are then needed to identify which specific programs differ.
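The one-way ANOVA F statistic compares the variability between group means with the variability within groups. The plain-Python sketch below computes F and its degrees of freedom; the function name and the test scores are hypothetical. Turning F into a p-value requires the F distribution, which libraries such as SciPy provide (for example, `scipy.stats.f_oneway` returns both the statistic and the p-value).

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    n_total, k = len(all_values), len(groups)
    grand_mean = sum(all_values) / n_total
    group_means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: how far group means sit from the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, group_means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical test scores from three educational programs
program_a = [72, 75, 78, 71, 74]
program_b = [80, 82, 79, 85, 81]
program_c = [73, 76, 74, 77, 75]
f_stat, df_b, df_w = one_way_anova_f(program_a, program_b, program_c)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```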

Nonparametric Tests

While many inferential statistics methodologies assume that data follows a specific distribution, nonparametric tests do not require these assumptions. These tests are particularly useful for small sample sizes or when dealing with ordinal data or data that violate parametric assumptions.

Common nonparametric tests include the Wilcoxon rank-sum test, which compares two independent groups, and the Kruskal-Wallis test, an extension of the Wilcoxon rank-sum test that compares more than two groups. Utilizing these tests enables researchers to conduct valid analyses when the normality assumption cannot be met, providing flexibility in statistical analysis.
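The Wilcoxon rank-sum statistic can be computed by ranking all observations together and summing the ranks of one group. The sketch below assigns average ranks to ties and uses the large-sample normal approximation for the p-value without a tie correction, so it is an illustration rather than a replacement for a library routine (SciPy, for example, provides `scipy.stats.ranksums` and `scipy.stats.mannwhitneyu`). The function names and the ordinal ratings are hypothetical, and with groups this small the normal approximation is rough.

```python
from statistics import NormalDist

def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the run of tied values
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_sum_test(x, y):
    """Rank-sum statistic W for x, with a normal-approximation two-sided p-value."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    w = sum(ranks[:n1])  # sum of the ranks belonging to group x
    mean_w = n1 * (n1 + n2 + 1) / 2  # expected W under H0
    var_w = n1 * n2 * (n1 + n2 + 1) / 12  # variance of W under H0, ignoring ties
    z = (w - mean_w) / var_w ** 0.5
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return w, z, p_value

# Hypothetical satisfaction ratings (1-5) from two independent groups
group_x = [3, 4, 2, 5, 4, 3]
group_y = [2, 1, 3, 2, 2, 1]
w, z, p = rank_sum_test(group_x, group_y)
print(f"W = {w}, z = {z:.2f}, approximate p = {p:.3f}")
```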

Bayesian Statistics

Bayesian statistics represents an alternative approach to inference that incorporates prior knowledge or beliefs into the analysis. Unlike traditional frequentist approaches, which rely solely on the data at hand, Bayesian methods allow researchers to update their beliefs in light of new evidence.

In Bayesian statistics, probability represents a degree of belief rather than a long-run frequency. This shift in perspective enables more intuitive interpretations and facilitates decision-making under uncertainty. Additionally, Bayesian methods can handle complex models that may be difficult to analyze using traditional techniques, making them a valuable tool in modern statistical analysis.
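A standard textbook illustration of Bayesian updating is the beta-binomial model for an unknown success rate: a Beta(a, b) prior combined with observed successes and failures yields a Beta posterior with updated parameters, and that posterior serves as the prior for the next batch of evidence. The prior, the data, and the function names below are hypothetical.

```python
def update_beta(prior_a, prior_b, successes, trials):
    """Posterior Beta parameters after observing binomial data (conjugate update)."""
    failures = trials - successes
    return prior_a + successes, prior_b + failures

def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

# Hypothetical: a weak prior belief centred on 50% for a treatment's success rate
a, b = 2, 2
# First batch of evidence: 7 successes in 10 patients
a, b = update_beta(a, b, successes=7, trials=10)
print(f"after batch 1: Beta({a}, {b}), posterior mean {beta_mean(a, b):.3f}")
# Second batch: 3 successes in 10 patients; the previous posterior is the new prior
a, b = update_beta(a, b, successes=3, trials=10)
print(f"after batch 2: Beta({a}, {b}), posterior mean {beta_mean(a, b):.3f}")
```

The posterior mean moves from 0.5 to about 0.643 after the first batch and back to 0.5 after the second, showing beliefs being revised as evidence accumulates.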

Conclusion

In conclusion, inferential statistics provides essential methods for making informed decisions from data. By understanding its principles and applications, professionals can extract valuable insights that help organizations innovate and improve outcomes. From healthcare to business and beyond, the ability to draw conclusions from sample data is foundational to building knowledge in an increasingly data-driven world.

The journey through inferential statistics reveals not just the tools and techniques that empower researchers, but also the importance of sound methodology, the intricacies of advanced statistical models, and the impact these practices have on real-world applications. As data continues to shape more domains, mastery of inferential statistics becomes ever more valuable, opening pathways to discovery, growth, and informed decision-making.